How To Scrape Web Pages At Scale

A deep dive into scalable web scraping with Python: using asynchronous methods and advanced parsing, you'll learn to extract data efficiently from many web pages.

Asynchronous Web Scraping

Asynchronous web scraping in Python uses asynchronous programming techniques to fetch data from the web without blocking the rest of the program while it waits for responses.

Alright, team! We've got a big challenge ahead of us today.

We're going to learn how to scrape web pages at scale using Python. I know it sounds technical, but trust me, it's going to be a game-changer for our loan management system at Bank of Yu.

So, picture this: we're sitting in a cozy local coffee shop on a Wednesday, sipping our favorite brews, and diving deep into the world of asynchronous web scraping.

We're going to install the necessary packages, like aiohttp and Beautiful Soup, to make our lives easier.

But it doesn't stop there. We're going to scrape multiple web pages, add our own custom HTML parsing logic, and even explore LangChain's document loaders for web scraping. By the end of this tutorial, we'll have all the tools we need to gather crucial data and calculate those important KPIs our boss is always asking for.

This course is a work of fiction. Unless otherwise indicated, all the names, characters, businesses, data, places, events and incidents in this course are either the product of the author's imagination or used in a fictitious manner. Any resemblance to actual persons, living or dead, or actual events is purely coincidental.

Asynchronous programming in Python is an efficient way to scrape the web. While one request is waiting on the network, the program can send and process other requests instead of sitting idle.

First, install the Python libraries that make asynchronous scraping possible: aiohttp for non-blocking HTTP requests and Beautiful Soup for HTML parsing. The asyncio module provides the event loop for asynchronous I/O. It ships with Python's standard library, so it needs no separate installation.

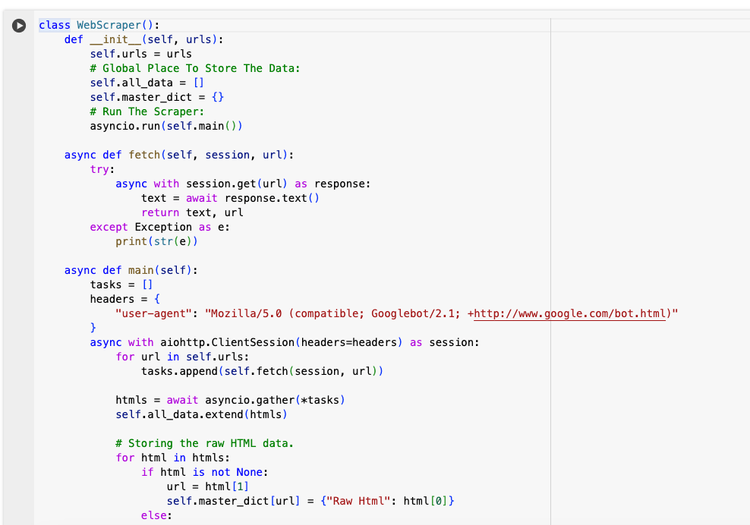

The core of an asynchronous web scraping script involves defining an entry function, often named main, which orchestrates the scraping process. Within this function, an asynchronous client session is created for making HTTP requests. A typical operation includes sending a GET request to a target webpage and processing the response, which involves examining status codes, headers, and the HTML content.
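Here is a minimal sketch of that structure. It assumes the aiohttp package is installed. The helper name `fetch_page` and the example URL are illustrative placeholders, not taken from the course. The sketch creates one session, sends a GET request, and reads the status code, a header, and the HTML body.

```python
import aiohttp


async def fetch_page(session: aiohttp.ClientSession, url: str) -> tuple[int, str, str]:
    """Send a GET request and return (status code, Content-Type header, HTML body)."""
    async with session.get(url) as response:
        status = response.status
        content_type = response.headers.get("Content-Type", "")
        html = await response.text()
    return status, content_type, html


async def main(url: str) -> tuple[int, str, str]:
    # One ClientSession is created for the whole run. It pools connections
    # and is closed automatically when the "async with" block exits.
    async with aiohttp.ClientSession() as session:
        status, content_type, html = await fetch_page(session, url)
    print(f"Status: {status}")
    print(f"Content-Type: {content_type}")
    print(html[:200])  # first 200 characters of the page
    return status, content_type, html
```

To run it against a real site, pass the entry coroutine to the event loop, for example `asyncio.run(main("https://example.com"))`. The calling module also needs `import asyncio`.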

Asynchronous tasks are executed with Python's asyncio.run() function. It creates an event loop, runs the given coroutine to completion, and then closes the loop. The time savings come from scheduling several requests on that loop at once. Instead of waiting for each response before sending the next request, the program waits for all of them together.
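A network-free sketch makes the overlap of waits visible. In this example, asyncio.sleep stands in for HTTP latency, which is an assumption made for illustration. `fake_fetch` and the page names are hypothetical. The same three "requests" are awaited first one after another and then together via asyncio.gather, and each version is timed.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float) -> str:
    # Hypothetical stand-in for a network request. asyncio.sleep yields
    # control to the event loop, just as awaiting an HTTP response would.
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def fetch_sequentially(urls: list[str], delay: float) -> list[str]:
    # Each await finishes before the next "request" starts.
    return [await fake_fetch(url, delay) for url in urls]


async def fetch_concurrently(urls: list[str], delay: float) -> list[str]:
    # All "requests" are scheduled on the loop at once, so their waits overlap.
    return await asyncio.gather(*(fake_fetch(url, delay) for url in urls))


def timed(coro) -> tuple[list[str], float]:
    # asyncio.run creates an event loop, runs the coroutine to completion,
    # and then closes the loop.
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


urls = ["page-1", "page-2", "page-3"]
seq_pages, seq_time = timed(fetch_sequentially(urls, 0.2))
con_pages, con_time = timed(fetch_concurrently(urls, 0.2))
print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

With three 0.2-second delays, the sequential version takes roughly 0.6 s and the concurrent one roughly 0.2 s. The concurrent total is bounded by the slowest wait rather than the sum of all of them.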

For tasks involving multiple webpages, asyncio.gather is especially useful. It runs several awaitables concurrently on the event loop, such as one fetch per URL in a list, and returns their results in the same order as the inputs.

An example implementation might feature a WebScraper class designed to handle the scraping of multiple URLs. This class typically includes a method for fetching webpage content and a main method to manage the asynchronous execution of these fetch operations.
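The class described above might look like the following sketch. It assumes aiohttp is installed and follows the text's outline of a fetch method plus a main method. The calls to `raise_for_status()` and `return_exceptions=True` are choices made for this sketch, not something the course specifies. They make one failed page show up as an exception object instead of cancelling the whole batch.

```python
import asyncio

import aiohttp


class WebScraper:
    """Fetch several pages concurrently with one shared aiohttp session."""

    def __init__(self, urls: list[str]) -> None:
        self.urls = urls

    async def fetch(self, session: aiohttp.ClientSession, url: str) -> str:
        # GET one page. raise_for_status turns 4xx/5xx responses into
        # aiohttp.ClientResponseError exceptions.
        async with session.get(url) as response:
            response.raise_for_status()
            return await response.text()

    async def main(self) -> list:
        async with aiohttp.ClientSession() as session:
            tasks = [self.fetch(session, url) for url in self.urls]
            # gather runs the fetches concurrently and returns results in the
            # same order as self.urls. With return_exceptions=True, a failed
            # page appears as an exception object in its slot instead of
            # discarding the other results.
            return await asyncio.gather(*tasks, return_exceptions=True)
```

A call such as `asyncio.run(WebScraper(["https://example.com", "https://example.org"]).main())` returns one entry per URL, in input order. Entries for failed pages are exception objects rather than HTML, so check them with `isinstance` before parsing.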

To further refine the scraping process, HTML parsing libraries can be employed to extract specific data from webpages. This enables targeted scraping, such as extracting titles for SEO analysis or specific content for data analysis.
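Once pages have been fetched asynchronously, their HTML can be passed through a parser. The sketch below uses Beautiful Soup with Python's built-in "html.parser" backend to pull out a page title and headings, the kind of fields an SEO check might look at. The helper names and the sample HTML are hypothetical.

```python
from typing import Optional

from bs4 import BeautifulSoup


def extract_title(html: str) -> Optional[str]:
    """Return the stripped text of the page's <title> tag, or None if absent."""
    soup = BeautifulSoup(html, "html.parser")  # stdlib-backed parser, no lxml needed
    if soup.title is None or soup.title.string is None:
        return None
    return soup.title.string.strip()


def extract_headings(html: str, tag: str = "h2") -> list[str]:
    """Return the text of every heading with the given tag name."""
    soup = BeautifulSoup(html, "html.parser")
    return [heading.get_text(strip=True) for heading in soup.find_all(tag)]


# Hypothetical sample page used only for illustration.
sample = """
<html>
  <head><title> Personal Loan Rates </title></head>
  <body>
    <h2>Fixed rate</h2>
    <h2>Variable rate</h2>
  </body>
</html>
"""
print(extract_title(sample))     # Personal Loan Rates
print(extract_headings(sample))  # ['Fixed rate', 'Variable rate']
```

Parsing is CPU-bound and synchronous. For a handful of pages it is fine to run it after the fetches complete. Very large batches may be worth moving off the event loop.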

Hey, welcome back. In this video, we're going to cover asynchronous web scraping using Python, and towards the end of the video we'll cover how you can do the same thing using LangChain. So, the first thing we're going to do is install a couple of different packages: Beautiful Soup 4, and we're also going to install requests.
